What is the GPU engine and why should we use it?

The GPU engine is an alternative to the CPU engine. It lets you use your GPUs (graphics cards) to compute renders. GPUs are usually much faster than CPUs, especially when more than one graphics card is used. In this version, much of the code has been changed to make the GPU engine even faster and to allow bigger images to be rendered.

Not all graphics cards will work; see the next section for details.

GPU engine hardware requirements

Graphics cards have to support CUDA. The GPU engine is built upon the CUDA computing platform, which is developed by Nvidia, so only Nvidia graphics cards will work. AMD and Intel cards won't work.

The Maxwell, Pascal, Volta and Turing micro-architectures are supported (compute capability 5.0 and up). The Kepler architecture is not supported, although cards based on it may still work.
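The compute capability rule above can be sketched as a small check. This is only an illustration: the helper names are hypothetical, and the mapping from compute capability to architecture name is general Nvidia information rather than something taken from the Maxwell documentation. You would supply the capability of your own card, as listed by Nvidia.

```python
# Minimum compute capability stated in the requirements above.
MIN_SUPPORTED = (5, 0)


def architecture_name(major: int, minor: int) -> str:
    """Map a compute capability to an architecture name (assumed mapping)."""
    if major == 7:
        # 7.5 is Turing; other 7.x capabilities belong to Volta.
        return "Turing" if minor == 5 else "Volta"
    return {3: "Kepler", 5: "Maxwell", 6: "Pascal"}.get(major, "unknown")


def meets_minimum(major: int, minor: int) -> bool:
    """Return True if the capability is 5.0 or higher."""
    return (major, minor) >= MIN_SUPPORTED


def describe(major: int, minor: int) -> str:
    status = "supported" if meets_minimum(major, minor) else "below the stated minimum"
    return f"{major}.{minor} ({architecture_name(major, minor)}): {status}"


if __name__ == "__main__":
    for capability in [(3, 5), (5, 2), (6, 1), (7, 0), (7, 5)]:
        print(describe(*capability))
```

A card that falls below the minimum, such as a Kepler card, is reported as below the stated minimum, which matches the text: it is not supported, even if it happens to work.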

It's very important that graphics card drivers are up to date.

Here is an illustrative table with most of the supported graphics cards: Table of supported GPUs

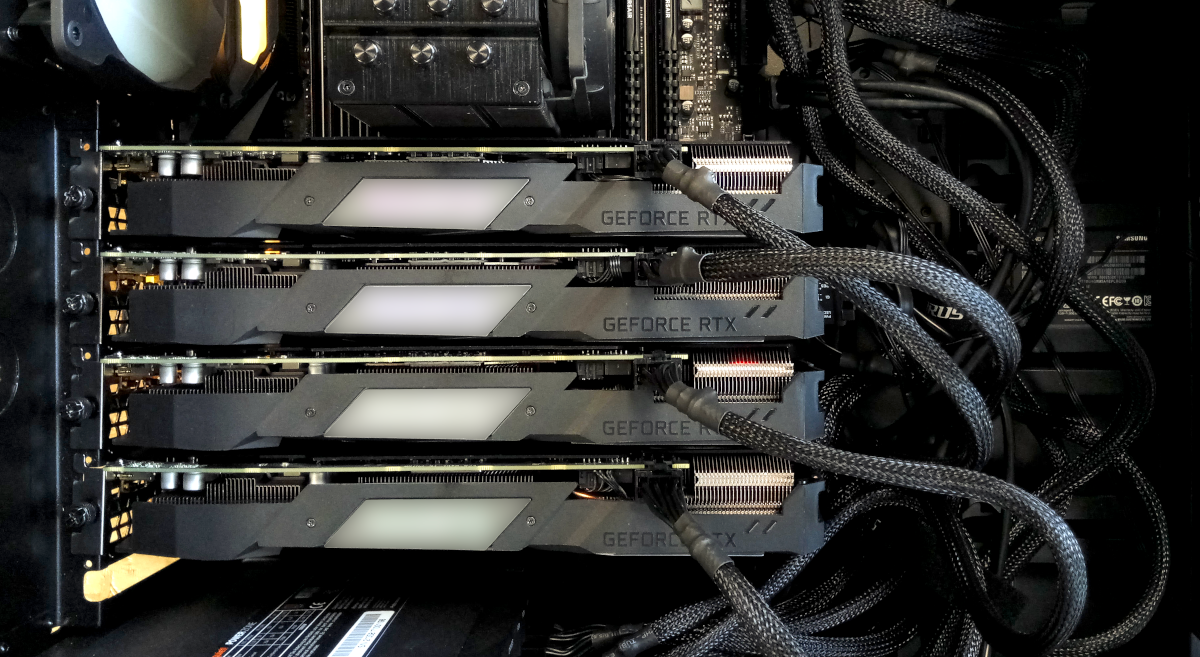

Multi-GPU

Maxwell now allows you to use all the graphics cards on your computer to calculate the render.

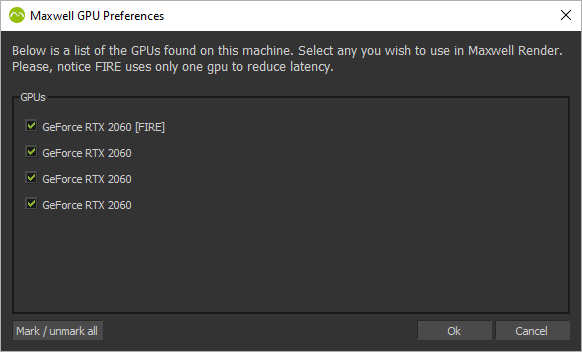

To choose which graphics cards to use, click the gear icon that appears next to the Engine selector in Render Options when the GPU engine is selected. The plugins have a similar button in their equivalent menu.

Customize your GPU preferences here (capture from Maxwell Studio)

This button will open the Maxwell GPU Preferences panel.

The panel sets Maxwell's behavior on a particular computer for all Maxwell apps and plugins. These settings are not stored in the scene file or distributed across the network, as each computer can have a very different configuration.

In the case of FIRE (the interactive preview), Maxwell will use only one of the cards (the best one), which is marked with the [FIRE] tag.

Check the cards you want to use and leave unchecked the ones you want to spare.

This panel can also be opened from the Maxwell installation folder by running the “mxgpuprefs” application.

GPU Preferences panel

| Info |
|---|
| Please note that memory is not shared between the different cards, so the graphics card with the smallest amount of memory will be the bottleneck for the render. The whole scene has to fit into the memory of each graphics card in use. |
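The memory rule in the note above can be illustrated with a short sketch. The `Card` class, the card names and the memory figures are hypothetical, and the sketch ignores the memory that the driver or the display may already be using, so treat the result as an upper bound rather than an exact budget.

```python
from dataclasses import dataclass


@dataclass
class Card:
    name: str
    memory_gb: float
    enabled: bool = True  # mirrors the checkbox in the GPU Preferences panel


def scene_budget_gb(cards: list[Card]) -> float:
    """Largest scene that fits: the memory of the smallest enabled card."""
    enabled = [card for card in cards if card.enabled]
    if not enabled:
        raise ValueError("at least one card must be enabled")
    return min(card.memory_gb for card in enabled)


def cards_too_small(cards: list[Card], scene_gb: float) -> list[str]:
    """Names of enabled cards whose memory cannot hold the whole scene."""
    return [c.name for c in cards if c.enabled and c.memory_gb < scene_gb]


if __name__ == "__main__":
    cards = [Card("card A", 11.0), Card("card B", 6.0)]
    print(scene_budget_gb(cards))        # limited by the 6 GB card
    print(cards_too_small(cards, 8.0))   # card B cannot hold an 8 GB scene
    cards[1].enabled = False             # spare the smaller card
    print(scene_budget_gb(cards))        # budget rises to 11 GB
```

The sketch shows the trade-off: leaving the smaller card unchecked gives up its computing power but raises the size of the scene that can be rendered.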

Will I get the same results using the GPU and the CPU engines?

Our aim is to make both engines render exactly the same result; however, the two engines are different in nature, so small differences can appear.

Additionally, not all the features available in the CPU engine are supported yet in the GPU engine. We will keep working to add items to the list of supported features.

Here is a table summarizing the features currently supported or not supported in the GPU engine:

| Materials | Environment | Extensions | Channels | Channels | Camera |
|---|---|---|---|---|---|
| Referenced MXM | Physical Sky | Maxwell Scatter | Alpha | Deep | Stereo lenses |
| Normal layer mode | Constant Dome | Maxwell Sea | Z-buffer | UV | Simulens |
| Additive layer mode | Image Based | Maxwell Grass | Shadow | Custom Alpha | Camera Response |
| Layer opacity and masks | **Rendering** | Maxwell Hair | Material ID | Reflectance | Tone mapping |
| Transmittance | Interactive render (FIRE) | Asset Reference | Object ID | **Camera** | **Miscellany** |
| AGS | Production render | Subdivision modifier | Triangle ID | Thin Lens | Render Booleans |
| Sub-surface scattering | Network Rendering | Maxwell Cloner | Motion Vector | Pinhole | Motion Blur \* |
| Dispersion | Multi-GPU | Maxwell Volumetrics | Roughness | Ortho | Region Render |
| Procedural textures | Denoiser | Maxwell Particles | Fresnel | Fisheye | Hidden to Camera |
| Displacement (pretess mode) | Multilight \*\* | Maxwell Mesher | Normals | Spherical | Hidden to Refl/Refr |
| Coatings | | Maxwell Cloud | Position | Cylindrical | Hidden to GI |

| Info |
|---|
| \* Motion blur works for all transformations but not for deformation. \*\* Only Intensity Multilight mode is supported, not Color Multilight. |

A tip regarding antialiasing (a little hack)

The antialiasing used by the GPU engine differs from the one used by the CPU engine. However, there is a somewhat hidden way to tune it.

You can do this from Maxwell Render, either while the render is running or after it has finished. Go to the Camera panel > Overlay Text section, but do not activate the overlay. In its text box, enter, for example, "yes 1 1" (without quotes) and then refresh the render. The first value can be 0, 1, 2 or 3 and selects the type of antialiasing filter according to the table below. The second value can be any number between 0 and 2, decimals included; it can be understood as the opacity or intensity of the antialiased pixels.

| First value | Filtering type |
|---|---|
| 0 | Filter Gaussian Diagonal |
| 1 | Filter Mitchell Diagonal |
| 2 | Filter Quadratic Beta Spline Diagonal |
| 3 | Filter Cubic Beta Spline Diagonal |
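As a convenience, the text to type into the Overlay Text box can be assembled and validated with a small helper. This is a hypothetical sketch, not part of Maxwell: it only builds the string described above (the word "yes" followed by the filter type and the intensity) and checks that both values are within the documented ranges.

```python
# Filter types from the table above.
FILTERS = {
    0: "Filter Gaussian Diagonal",
    1: "Filter Mitchell Diagonal",
    2: "Filter Quadratic Beta Spline Diagonal",
    3: "Filter Cubic Beta Spline Diagonal",
}


def overlay_text(filter_type: int, intensity: float) -> str:
    """Build the Overlay Text string, e.g. "yes 1 1"."""
    if filter_type not in FILTERS:
        raise ValueError("filter type must be 0, 1, 2 or 3")
    if not 0 <= intensity <= 2:
        raise ValueError("intensity must be between 0 and 2")
    # The "g" format drops a trailing ".0", so 1.0 is written as "1".
    return f"yes {filter_type} {intensity:g}"


if __name__ == "__main__":
    print(overlay_text(1, 1))     # Mitchell filter at intensity 1
    print(overlay_text(3, 0.75))  # Cubic Beta Spline filter at intensity 0.75
```

The resulting string still has to be pasted into the Overlay Text box by hand, followed by a refresh of the render.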

Which card should I buy to use with the GPU engine?

Only Nvidia cards will work; no AMD or Intel, sorry.

As usual, the bigger the numbers, the better, but which numbers really affect the render?

The amount of memory on the graphics card is crucial, as the whole scene has to fit in it to be able to render, so the more the better. The amount of memory does not affect the speed of the render, though; it determines the size of the image you can render, the amount of geometry you can load, and the number and size of the textures that can be loaded.

The higher the number of CUDA cores and their speed, the better. This will determine the rendering speed for that particular graphics card.

The width of the memory interface and its bandwidth also affect speed, as they determine how fast data travels between the memory and the cores. The higher, the better.
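When comparing specification sheets, the relationship between memory interface width and bandwidth can be worked out with the usual formula: bandwidth in GB/s equals the bus width in bits divided by 8, multiplied by the effective data rate per pin in Gbps. The sketch below is a general hardware calculation, not something specific to Maxwell, and the figures used are illustrative.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits: width of the memory interface (e.g. 256).
    data_rate_gbps: effective data rate per pin (e.g. 14 for 14 Gbps memory).
    """
    if bus_width_bits <= 0 or data_rate_gbps <= 0:
        raise ValueError("bus width and data rate must be positive")
    # Each transfer moves bus_width_bits bits, i.e. bus_width_bits / 8 bytes.
    return bus_width_bits / 8 * data_rate_gbps


if __name__ == "__main__":
    # Illustrative figures: a 256-bit interface with 14 Gbps memory.
    print(memory_bandwidth_gb_s(256, 14))
    # A wider 352-bit interface with slower 11 Gbps memory.
    print(memory_bandwidth_gb_s(352, 11))
```

A wider interface can make up for slower memory, so it is the resulting bandwidth, rather than either number alone, that is worth comparing between cards.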